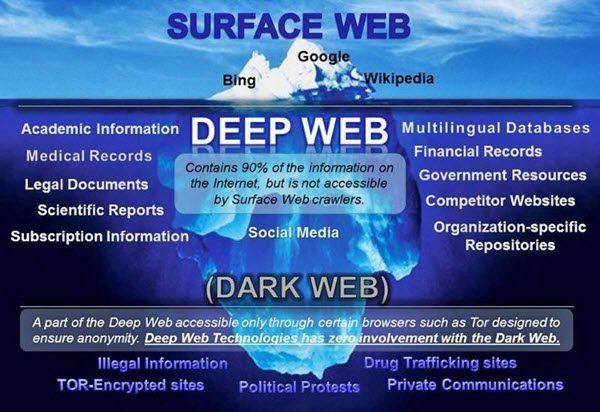

It is a false assumption to think that Google searches the entire web when you input your search. Far from this. It searches the tip of the web, i.e. the Surface or Visible or Open Web. Between 5 to 10% of the whole web. Google (and other standard web-search engines) cannot reach the Deep and Dark webs.

The peak of the iceberg — in the depths of the Internet | by Aleksa Jadžić | Medium

The reason is very simple. Google searches the pages that its bots and spiders have previously crawled, indexed (i.e. categorized and stored), and ranked (i.e. prioritized). Two main processes are at work when you google: the search itself (which you initiate when you enter your search string), and everything that the search engine has already done (crawling, indexing, and ranking) so that your results can come up quickly. It is also wrong to think, that Google (and the likes) search the web live. Because of the web’s intergalactic size, it would be impossible to do this in a reasonable time.

To know more, check our different Tips :

- Google 3 basic mechanisms (crawling, indexing, and ranking)

- How to access four major types of resources Google does not access (with links)

- The 3 webs (visible, deep, and dark)

It is not only the sheer size of the web that makes it impossible to search it exhaustively and in real-time, but also a number of technical issues that prevent Google (and the likes) from crawling and indexing a very wide range of online resources.

- Some websites specifically indicate their desire not to be crawled/indexed (web designers include instructions in their programs, such as robots.txt or noindex).

- Some are too poorly designed, do not include links on their first page, or have broken lines or corrupted codes.

- Some take too long to load.

- Some are black-listed. Google Transparency Report https://transparencyreport.google.com/?hl=en mentions the delisted websites (+ other information, such as how Google handles content removal, user data disclosure, security issues, government requests, etc). To know more about this, websearch “blacklisted website google transparency report”.

- Some are dynamic, i.e. they generate and display the content on the fly, based on the actions made by the users. A large part of these comes from databases that are internal to websites, and which data is dynamically generated upon the user’s request. This is the case with many official data (government, supra-national, trade associations…), directories, repositories, academic and scientific information, etc.

- Many websites contain protected information (password protected), for which one needs to subscribe (for free or for a fee). These represent a huge part of the web. Many of these are professional information resources, for which you need to pay.

Concretely, here are some examples of online content that remains inaccessible to Google and other standard web-search engines

| (A) | Your medical, banking, and other personal records are usually well protected and encrypted (something every user would agree with). |

| (B) | Most forms, because their content is usually dynamic. |

| (C) | Not long ago, search engines were still leaving out web content that was based on file formats other than HTM. Now they can index a large array of files. Google can search the following file formats: .pdf, .ppl, .ps, .doc, .rtf, .xls, .dwf, .kml, .kmz, and .swf, but are not able to read compressed zip files yet (end 2021). |

| (D) | Your private Facebook profile, your Amazon content, your Edit profile page on Instagram, etc. |

| (E) | Many databases-based websites (with extensive data banks). Those of government (most of them have a statistics website full of free data), of the OECD Data, Data overview – IEA, WTO Data – Information on trade and trade policy measures, of United Nations agencies (WHO, Statistics and databases (ilo.org), UNHCR – UNHCR Data, ITU-D ICT Statistics, Data for Researchers (wipo.int), UNICEF DATA – Child Statistics, IMF Data, World Bank Open Data | Data, Data | UN Women Data Hub… for a full list, check UN System, etc. Much of their content is usually generated dynamically (in opposition to static webpages). Standard web engines can locate the entities, but cannot extract information from them, in other terms, they cannot mine this information using their crawling tools. You need to go to their websites to search for their data. |

| (F) | Many professional information providers. They are registration based, i.e. you need to subscribe to (and usually pay for their information). Some examples: Factiva/DowJones (press), Bloomberg, Factset, Gartner, WoodMac, Emis, etc. They represent a very large part of the web. These represent a very large part of web information. |

Google cannot mine these resources, in other terms, their respective data will not show up in your Google result pages (or only very few of them). It cannot search for data embedded in a website database. Considering the wealth of information contained in (E) and (F), no information pros should ignore them. Here is the general way to access them.

- You can directly navigate to their website (if you know their URL) and search for a data or statistics tab. In it, you find its proprietary search engine which allows you to drill down into their rich data, applying many criteria.

- You can Google the name of the entity + data or database or statistics and then search within its data or statistics section or tab.

- If you don’t know the name of the entity, and using Google, input the type of data you need, for example, telecommunication and statistics, you should get among others, the ITU entry (which is the United Nations entity for the International Telecommunication Union). Another example, if you are interested in trade data, input trade and statistics or data, you should get the WTO (World Trade Organization), as well as many other trade associations or treaties

The idea is that you navigate to these websites, then search within. This is the way you access these (E) & (F) rich resources. They are, for many, free and official, so rather reliable, even if to exploit their data correctly, the usual vigilance and some basic statistical understanding are required. If uncomfortable with statistics, know that many of these websites have some help pages and/or some contacts; they also have become much more straightforward and intuitive to use. Check our rich Tip/Resources for links to many of these resources, as well as our Tip/Tools on the 3 webs and Google mechanisms.

Finally, it should now be evident to you, that when you type a query into Google and the likes, these search a very small portion of the web, the webpages their spiders and bots have already crawled, indexed, and ranked. They leave out much data that you need to access from their respective website. Even if Google proudly boasts that its Search index contains hundreds of billions of webpages and is well over 100,000,000 gigabytes in size, remember that you are far from reaching the whole of the web, when you google. The world is very large beyond google and the likes.